Our household uses Apple’s Time Machine backup system as one of our backup methods. Various Macs are set to run their backups at night (we use TimeMachineEditor to schedule backups so they don’t take up local bandwidth or computing resources during the day) and everything goes to a Synology NAS (basically following the steps in this great guide from 9to5Mac).

But Time Machine doesn’t necessarily complete a successful backup for every machine every night. Maybe the Mac is turned off or traveling away from home. Maybe the backup was interrupted for some reason. Maybe the backup destination isn’t on or working correctly. Most of these conditions self-correct within a day or two.

Still, I wanted a way to be notified if any given Mac had not completed a successful backup after a few days, so that I could take a look. If, say, three days go by without a successful backup, I start to get nervous. I also didn’t want to rely on each Mac’s human owner/user noticing the problem and remembering to tell me about it.

I decided to handle this by having a local script on each machine send a daily backup status to a centralized database, accomplished through a new endpoint on my custom webhook server. The webhook call stores the status update locally in a database table. Another script runs daily to make sure every machine is current, and sends a warning if anyone is running behind.

Here are the details in case it helps anyone else.

First, here’s the script that runs once every morning on each Mac, to send the latest backup date to the webhook server:

#!/bin/bash

# Send the last successful backup timestamp to the webhook server for further reporting/monitoring

HOST=$(hostname)

LAST_BACKUP_TIMESTAMP=$(tmutil latestbackup | rev | cut -d "/" -f 1 | rev | sed -e 's/-[0-9]\{6\}$//')

WEBHOOK_URL=https://my.special.webhook/endpoint

curl -s -H "Content-Type:application/json" -X POST --data "{\"type\": \"backup\", \"host\": \"$HOST\", \"data\": \"$LAST_BACKUP_TIMESTAMP\"}" "$WEBHOOK_URL"

exit;

tmutil is the Mac-provided utility to manipulate Time Machine backups on the command line, and the latestbackup argument just returns the path to the latest backup snapshot. That path contains the date of the backup, so we use some cutting and reversing and stream editing to extract that date into a variable.
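As a rough illustration of that cutting, reversing and stream editing, here is the same pipeline wrapped in a function and run against a sample path, so it can be tried without calling tmutil. The function name `extract_backup_date` and the sample path are made up for this sketch; the real path depends on your backup destination and macOS version.

```shell
#!/bin/bash

# Sketch: the same rev/cut/sed pipeline as the script above, wrapped in a
# function (hypothetical name) so it can be tried on a sample path.
extract_backup_date() {
  # rev | cut -f 1 | rev keeps only the last path component;
  # sed then strips the trailing -HHMMSS time portion.
  printf '%s\n' "$1" | rev | cut -d "/" -f 1 | rev | sed -e 's/-[0-9]\{6\}$//'
}

# Hypothetical path, for illustration only; real paths depend on the setup.
SAMPLE_PATH="/Volumes/Time Machine Backups/Backups.backupdb/host1/2020-10-21-031542"

# Prints: 2020-10-21
extract_backup_date "$SAMPLE_PATH"
```

If the last path component does not end in a six-digit time, the sed expression leaves it unchanged, so it is worth checking what tmutil returns on your own machines.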

This script is run via cron once per day:

# Send latest successful backup job to webhook server
0 8 * * * /path/to/time-machine-last-backup-check.sh

Note that newer versions of macOS limit access to Time Machine Backup data. If you see a message like this in your cron output:

No machine directory found for host. The operation could not be completed because tmutil could not access private application data on the backup disk. Use the Privacy tab in the Security and Privacy preference pane to add Terminal to the list of applications which can access Application Data.

then you may need to add “Terminal.app” to the list of applications that can access Application Data. Please research any security concerns or risks this might present for your specific setup before doing this.
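When tmutil fails like this, the variable holding the backup date can end up empty, and the script above would still post it. One possible guard, sketched below, is to check that the value looks like a YYYY-MM-DD date before sending anything. The helper name `looks_like_backup_date` is hypothetical and not part of the original script.

```shell
#!/bin/bash

# Sketch: only report a value that looks like a YYYY-MM-DD date.
# looks_like_backup_date is a hypothetical helper, not part of the original script.
looks_like_backup_date() {
  printf '%s' "$1" | grep -Eq '^[0-9]{4}-[0-9]{2}-[0-9]{2}$'
}

# Sample values: a normal result, and the empty string left behind when
# tmutil cannot read the backup data.
for candidate in "2020-10-21" ""; do
  if looks_like_backup_date "$candidate"; then
    echo "would send: $candidate"
  else
    echo "skipping: no usable backup date" >&2
  fi
done
```

In the real script, the check would wrap the curl call, so a permissions problem shows up in the cron output instead of as a blank entry in the database.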

To get things set up on the webhook server, I created a database table:

CREATE TABLE events (
  id int auto_increment primary key,
  created_at timestamp default current_timestamp,
  type varchar(100),
  host varchar(200),
  data text
);

and then created a script that will process the webhook payload and insert it into the database:

#!/usr/bin/php

<?php

/**

* Log received events

*/

if ( empty( $argv[1] ) ) {

echo 'No payload, exiting.' . "\n";

die;

}

$pl = json_decode( $argv[1] );

$conn = new mysqli('localhost', 'event_database_user', 'event_database_password', 'event_database');

if ($conn->connect_error) {

die("Connection failed: " . $conn->connect_error);

}

$stmt = $conn->prepare("INSERT INTO events (type, host, data) VALUES (?, ?, ?)");

$stmt->bind_param("sss", $type, $host, $data);

$type = empty($pl->type) ? 'backup' : $pl->type;

$host = empty($pl->host) ? 'unknown' : $pl->host;

$data = empty($pl->data) ? '' : $pl->data;

$stmt->execute();

exit;

The webhook configuration that handles passing the request to this script looks something like this:

{

"id": "receive-event-entry",

"execute-command": "/path/to/webhook-add-events-log.php",

"command-working-directory": "/var/tmp",

"pass-arguments-to-command":

[

{

"source": "entire-payload"

}

],

"trigger-rule":

{

"match":

{

"type": "value",

"value": "my-private-key-goes-here",

"parameter":

{

"source": "url",

"name": "key"

}

}

}

},

We use a private key parameter in the webhook URL so that only hosts that know the key can submit events to the webhook endpoint.
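On the client side, that means the URL the Mac posts to has to carry the key as a `key` query parameter, since that is what the trigger rule matches on. A minimal sketch, assuming the placeholder endpoint and key used in this post (`build_webhook_url` is a hypothetical helper):

```shell
#!/bin/bash

# Sketch: append the shared key as a "key" query parameter, which is what
# the trigger rule above matches on. Both values are placeholders.
WEBHOOK_BASE_URL="https://my.special.webhook/endpoint"
WEBHOOK_KEY="my-private-key-goes-here"

build_webhook_url() {
  # Assumes the key contains only URL-safe characters (no encoding is done).
  printf '%s?key=%s\n' "$1" "$2"
}

WEBHOOK_URL=$(build_webhook_url "$WEBHOOK_BASE_URL" "$WEBHOOK_KEY")

# Prints: https://my.special.webhook/endpoint?key=my-private-key-goes-here
echo "$WEBHOOK_URL"
```

The resulting value is what would go into the WEBHOOK_URL variable of the per-Mac script.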

Then, as the days pass, I start seeing event entries in the database table:

MariaDB [webhooks]> select * from events;
+----+---------------------+---------+---------+-------------------+
| id | created_at          | type    | host    | data              |
+----+---------------------+---------+---------+-------------------+
| 12 | 2020-10-22 16:21:05 | backup  | host1   | 2020-10-21        |
| 13 | 2020-10-23 09:31:38 | backup  | host2   | 2020-10-23        |

Finally, I have another job run on the same server where the database table lives, to notify me of outdated backups:

<?php

$hosts = array(

'host1',

'host2',

);

$conn = new mysqli('localhost', 'event_database_user', 'event_database_password', 'event_database');

if ($conn->connect_error) {

die("Connection failed: " . $conn->connect_error);

}

foreach ($hosts as $host) {

$query_result = $conn->query( "select cast(data as DATE) as backup_date from events where type = 'backup' and host = '" . $conn->real_escape_string( $host ) . "' order by backup_date desc limit 1" );

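$last_backup = $query_result ? $query_result->fetch_assoc() : null;

$last_backup_date = $last_backup['backup_date'] ?? null;

if ( empty( $last_backup_date ) ) {

// No usable backup date has been recorded for this host yet.

post_to_slack( 'Warning: No Time Machine backup has been recorded for host ' . $host . '.' );

} elseif ( strtotime('-3 days') > strtotime( $last_backup_date ) ) {

post_to_slack( 'Warning: Last Time Machine backup for host ' . $host . ' happened too long ago, on ' . $last_backup_date . '.' );

}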

}

exit;

function post_to_slack( $message = '' ) {

...

}

(That script is a little rough around the edges, I know.)

It also runs daily via cron:

# Warn about outdated laptop backups
30 8 * * * /usr/bin/php /path/to/warn-on-outdated-backups.php

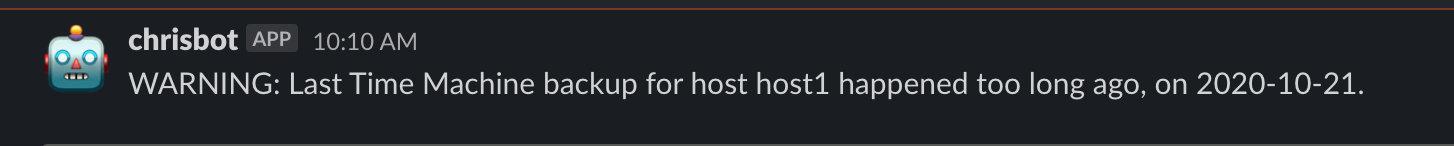

The end result is a nice Slack message I can act on (or ignore, if this is an expected result):

Obviously you could route those messages however you see fit for your particular needs.

I was intentional in keeping the database structure kind of generic so that I could re-use this event submission/tracking setup for other kinds of things. For example, I could imagine logging other generic events from local devices, our smart home system, etc. that I might want to query and report on later.
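To give a sense of what that reuse could look like, here is a small sketch that builds the same JSON shape the backup script sends, but for an arbitrary event type. The helper `build_event_payload` and the `disk-usage` event type are invented for illustration; nothing like them exists in my setup yet.

```shell
#!/bin/bash

# Sketch: build the same JSON shape the backup script sends, for any event type.
# build_event_payload is a hypothetical helper; it does no JSON escaping, so it
# assumes the values contain no quotes or backslashes.
build_event_payload() {
  printf '{"type": "%s", "host": "%s", "data": "%s"}\n' "$1" "$2" "$3"
}

# "disk-usage" is a made-up event type for illustration.
# Prints: {"type": "disk-usage", "host": "host1", "data": "81%"}
build_event_payload "disk-usage" "host1" "81%"
```

The output could be passed to curl with --data in the same way as the backup payload, and the events table would store it without any schema changes.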

These scripts could definitely be made more robust, and the setup won’t handle every scenario, but it’s a nice safety net for our home backup setup.