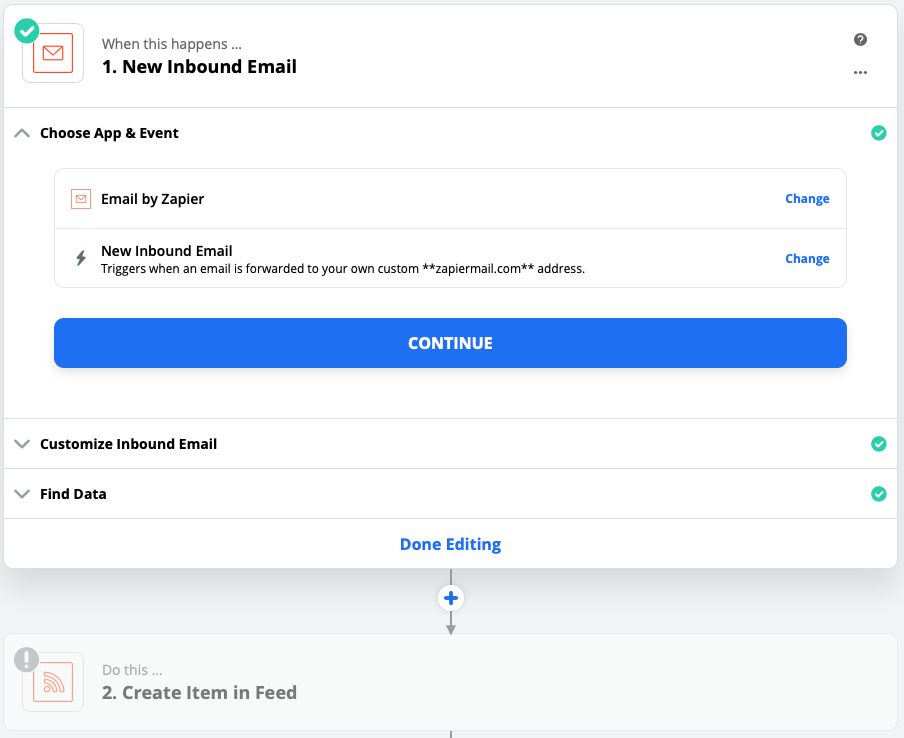

I needed to generate a valid RSS feed from a Twitter user’s timeline, but only for tweets that matched a certain pattern. Here’s how I did it using PHP.

First, I added the dependency on the TwitterOAuth library by Abraham Williams:

    $ composer require abraham/twitteroauth

This library handles all of my communication and authentication with Twitter’s API. Then I created a read-only app in my Twitter account and securely noted the four authentication items I would need: the consumer API key and secret, and the access token and secret.
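
One way to keep those four values out of the script itself is to read them from environment variables. This is only a sketch: the helper name and the variable names are hypothetical, and it assumes PHP 7 or later.

```php
<?php
/**
 * Read the four Twitter credentials from environment variables.
 * The variable names below are hypothetical; use whatever names you export.
 */
function load_twitter_credentials(): array {
    $names = array(
        'consumerKey'       => 'TWITTER_CONSUMER_KEY',
        'consumerSecret'    => 'TWITTER_CONSUMER_SECRET',
        'accessToken'       => 'TWITTER_ACCESS_TOKEN',
        'accessTokenSecret' => 'TWITTER_ACCESS_TOKEN_SECRET',
    );

    $credentials = array();
    foreach ( $names as $key => $env_name ) {
        $value = getenv( $env_name );
        // getenv() returns false when the variable is not set
        if ( false === $value || '' === $value ) {
            throw new RuntimeException( "Missing environment variable: $env_name" );
        }
        $credentials[ $key ] = $value;
    }

    return $credentials;
}
```

The returned array can then supply the four arguments to the `TwitterOAuth` constructor in place of hard-coded strings.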

Now, I can quickly bring recent tweets from my target Twitter user into a PHP variable:

    require "/path/to/vendor/autoload.php";

    use Abraham\TwitterOAuth\TwitterOAuth;

    $consumerKey = "your_key_goes_here"; // Consumer Key
    $consumerSecret = "your_secret_goes_here"; // Consumer Secret
    $accessToken = "your_token_goes_here"; // Access Token
    $accessTokenSecret = "your_token_secret_goes_here"; // Access Token Secret

    $twitter_username = 'wearrrichmond';

    $connection = new TwitterOAuth( $consumerKey, $consumerSecret, $accessToken, $accessTokenSecret );

    // Get the 10 most recent tweets from our target user, excluding replies and retweets
    $statuses = $connection->get(
        'statuses/user_timeline',
        array(
            "count" => 10,
            "exclude_replies" => true,
            'include_rts' => false,
            'screen_name' => $twitter_username,
            'tweet_mode' => 'extended',
        )
    );
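
The request can fail (bad credentials, rate limits, a renamed account), and in that case the response is not a list of tweets. A small guard like the following could catch that before any looping happens. It is a sketch only: `tweets_or_fail()` is a hypothetical helper, not part of TwitterOAuth, and it assumes the library is returning decoded JSON as objects, with error responses carrying an `errors` list.

```php
<?php
/**
 * Return the list of tweets from a decoded API response, or throw if the
 * response looks like an error payload. Hypothetical helper.
 */
function tweets_or_fail( $response ): array {
    // Error responses are assumed to be objects with an "errors" list
    if ( is_object( $response ) && ! empty( $response->errors ) ) {
        $first = $response->errors[0];
        throw new RuntimeException( "Twitter API error {$first->code}: {$first->message}" );
    }

    // A successful timeline request decodes to a plain array of tweets
    if ( ! is_array( $response ) ) {
        throw new RuntimeException( 'Unexpected response from the Twitter API' );
    }

    return $response;
}
```

Wrapping the call as `$statuses = tweets_or_fail( $connection->get( ... ) );` would make the script stop with a clear message instead of quietly producing an empty feed.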

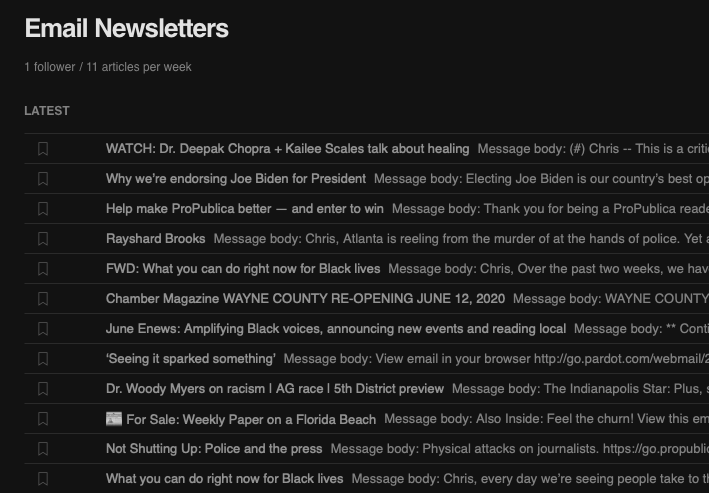

My specific use case is that my local public school system doesn’t publish an RSS feed of news updates on its website, but it does tweet those updates in a fairly consistent pattern: the headline of the announcement, possibly followed by an at-mention and/or an image, and then a link back to a PDF file that lives in a certain directory on its website. I wanted to capture these items for another site I created that aggregates local news headlines into one place, and that site mostly relies on each source having an RSS feed.

So, I only want to use the tweets that match this pattern in the custom RSS feed. Here’s that snippet:

    // Loop through each tweet returned by the API
    foreach ( $statuses as $tweet ) {
        $permalink = '';
        $title = '';

        // We only want tweets with URLs
        if ( ! empty( $tweet->entities->urls ) ) {
            // Look for a usable permalink that matches our desired URL pattern, and use the last (or maybe only) one
            foreach ( $tweet->entities->urls as $url ) {
                if ( false !== strpos( $url->expanded_url, 'rcs.k12.in.us/files' ) ) {
                    $permalink = $url->expanded_url;
                }
            }

            // If we got a usable permalink, go ahead and fill out the rest of the RSS item
            if ( ! empty( $permalink ) ) {
                // Set the title value from the Tweet text
                $title = $tweet->full_text;

                // Remove links (each URL up to the next whitespace, not the rest of the line)
                $title = preg_replace( '#\bhttps?://\S+#', '', $title );

                // Remove at-mentions
                $title = preg_replace( '/@\w+\b/', '', $title );

                // Collapse leftover runs of whitespace, then trim the beginning and end
                $title = trim( preg_replace( '/\s+/', ' ', $title ) );

                // TODO: Add the item to the feed here
            }
        }
    }
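
The substring check in the snippet above matches the pattern anywhere in the URL, including inside a query string on some other site. A stricter variation could parse the URL and compare the host and path separately. The function names here are hypothetical, and the sketch assumes PHP 7 or later.

```php
<?php
/**
 * Return true if $url points at the given host (with or without "www.")
 * and its path starts with $path_prefix.
 */
function url_matches( string $url, string $host, string $path_prefix ): bool {
    $parts = parse_url( $url );
    if ( false === $parts || empty( $parts['host'] ) ) {
        return false;
    }

    // Compare hosts case-insensitively and ignore a leading "www."
    $actual_host = preg_replace( '/^www\./', '', strtolower( $parts['host'] ) );
    if ( $actual_host !== strtolower( $host ) ) {
        return false;
    }

    $path = $parts['path'] ?? '';
    return 0 === strpos( $path, $path_prefix );
}

/**
 * Pick the last URL entity that matches, mirroring the loop in the snippet above.
 */
function find_permalink( array $urls, string $host, string $path_prefix ): string {
    $permalink = '';
    foreach ( $urls as $url ) {
        if ( url_matches( $url->expanded_url, $host, $path_prefix ) ) {
            $permalink = $url->expanded_url;
        }
    }
    return $permalink;
}
```

With these in place, the inner loop could become `$permalink = find_permalink( $tweet->entities->urls, 'rcs.k12.in.us', '/files/' );`.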

Now we have just the tweets we want, ready to add to an RSS feed. We can build the feed with PHP’s built-in SimpleXML extension (the SimpleXMLElement class). In my case, the final output is written to a file, which another part of my workflow can then query.
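
One thing to watch when building the feed with `SimpleXMLElement`: `addChild()` does not escape ampersands in the value it is given, so a URL with a query string such as `?a=1&b=2` triggers a warning and a damaged element. A small wrapper like this could handle the escaping first. The helper name and the sample values are made up for illustration.

```php
<?php
/**
 * Add a child element whose text is escaped for XML first.
 * Hypothetical helper; addChild() on its own does not escape "&".
 */
function add_text_child( SimpleXMLElement $parent, string $name, string $text ): SimpleXMLElement {
    return $parent->addChild( $name, htmlspecialchars( $text, ENT_XML1 | ENT_QUOTES, 'UTF-8' ) );
}

$xml  = new SimpleXMLElement( '<rss/>' );
$item = $xml->addChild( 'channel' )->addChild( 'item' );

// Sample values containing characters that need escaping
add_text_child( $item, 'title', 'Bus routes & schedules <updated>' );
add_text_child( $item, 'link', 'http://www.example.com/files/news.pdf?a=1&b=2' );
```

If the incoming text is already entity-encoded (my understanding is that tweet text arrives with `&amp;`, `&lt;`, and `&gt;` encoded), it would need to go through `htmlspecialchars_decode()` before this helper to avoid double encoding.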

Here’s the final result all put together:

    <?php
    /**
     * Generate an RSS feed from a Twitter user's timeline
     * Chris Hardie <chris@chrishardie.com>
     */

    require "/path/to/vendor/autoload.php";

    use Abraham\TwitterOAuth\TwitterOAuth;

    $consumerKey = "your_key_goes_here"; // Consumer Key
    $consumerSecret = "your_secret_goes_here"; // Consumer Secret
    $accessToken = "your_token_goes_here"; // Access Token
    $accessTokenSecret = "your_token_secret_goes_here"; // Access Token Secret

    $twitter_username = 'wearrrichmond';
    $rss_output_filename = '/path/to/www/rcs-twitter.rss';

    $connection = new TwitterOAuth( $consumerKey, $consumerSecret, $accessToken, $accessTokenSecret );

    // Get the 10 most recent tweets from our target user, excluding replies and retweets
    $statuses = $connection->get(
        'statuses/user_timeline',
        array(
            "count" => 10,
            "exclude_replies" => true,
            'include_rts' => false,
            'screen_name' => $twitter_username,
            'tweet_mode' => 'extended',
        )
    );

    // Start the RSS document and fill in the channel details
    $xml = new SimpleXMLElement( '<rss/>' );
    $xml->addAttribute( 'version', '2.0' );
    $channel = $xml->addChild( 'channel' );
    $channel->addChild( 'title', 'Richmond Community Schools' );
    $channel->addChild( 'link', 'http://www.rcs.k12.in.us/' );
    $channel->addChild( 'description', 'Richmond Community Schools' );
    $channel->addChild( 'language', 'en-us' );

    // Loop through each tweet returned by the API
    foreach ( $statuses as $tweet ) {
        $permalink = '';
        $title = '';

        // We only want tweets with URLs
        if ( ! empty( $tweet->entities->urls ) ) {
            // Look for a usable permalink that matches our desired URL pattern, and use the last (or maybe only) one
            foreach ( $tweet->entities->urls as $url ) {
                if ( false !== strpos( $url->expanded_url, 'rcs.k12.in.us/files' ) ) {
                    $permalink = $url->expanded_url;
                }
            }

            // If we got a usable permalink, go ahead and fill out the rest of the RSS item
            if ( ! empty( $permalink ) ) {
                // Set the title value from the Tweet text
                $title = $tweet->full_text;

                // Remove links (each URL up to the next whitespace, not the rest of the line)
                $title = preg_replace( '#\bhttps?://\S+#', '', $title );

                // Remove at-mentions
                $title = preg_replace( '/@\w+\b/', '', $title );

                // Collapse leftover runs of whitespace, then trim the beginning and end
                $title = trim( preg_replace( '/\s+/', ' ', $title ) );

                $item = $channel->addChild( 'item' );

                // addChild() does not escape ampersands, so escape the URL ourselves
                $item->addChild( 'link', htmlspecialchars( $permalink, ENT_XML1 ) );
                $item->addChild( 'pubDate', date( 'r', strtotime( $tweet->created_at ) ) );
                $item->addChild( 'title', $title );

                // For the description, include both the original Tweet text and a full link to the Tweet itself
                $item->addChild( 'description', $tweet->full_text . PHP_EOL . 'https://twitter.com/' . $twitter_username . '/status/' . $tweet->id_str . PHP_EOL );
            }
        }
    }

    // Write the finished feed to the output file
    $rss_file = fopen( $rss_output_filename, 'w' ) or die( "Unable to open $rss_output_filename!" );
    fwrite( $rss_file, $xml->asXML() );
    fclose( $rss_file );

    exit;

Here’s the same thing as a gist on GitHub.

I set this script up to run as a cron job every hour, which gives me a regularly updated feed based on the Twitter account’s activity.
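
Because the script runs unattended while something else reads its output, it may be worth writing the file in a way that never leaves a half-written feed on disk. One possible approach, sketched here with a hypothetical helper name and assuming PHP 7.1 or later, is to write to a temporary file in the same directory and then rename it into place.

```php
<?php
/**
 * Write $contents to $filename by way of a temporary file in the same
 * directory, then rename it over the target. Hypothetical helper.
 */
function write_file_atomically( string $filename, string $contents ): void {
    $temp = tempnam( dirname( $filename ), 'rss' );
    if ( false === $temp ) {
        throw new RuntimeException( "Unable to create a temporary file for $filename" );
    }

    if ( false === file_put_contents( $temp, $contents ) ) {
        @unlink( $temp );
        throw new RuntimeException( "Unable to write $temp" );
    }

    // tempnam() creates the file readable only by its owner; open it up
    // so a web server running as another user can still read the feed
    chmod( $temp, 0644 );

    if ( ! rename( $temp, $filename ) ) {
        @unlink( $temp );
        throw new RuntimeException( "Unable to move $temp to $filename" );
    }
}
```

In the script, `write_file_atomically( $rss_output_filename, $xml->asXML() );` would stand in for the `fopen()`, `fwrite()`, and `fclose()` calls.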

Several ways this could be improved include:

- Escaping and sanitizing the data that comes back from Twitter more thoroughly

- Making the filtering of tweets more tolerant of changes in the target user’s tweet structure

- Generalizing the functionality to support querying multiple Twitter accounts and generating multiple corresponding output feeds

- Fixing Twitter so that RSS feeds of user timelines are offered on the platform again
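
As a rough idea of what the multi-account version might look like, the per-account details could move into a configuration array, with one function that builds a feed from a list of items. Everything below is a sketch: the function names, the array keys, and the `$fetch_items` callback (which would wrap the Twitter request and filtering shown earlier) are all hypothetical.

```php
<?php
/**
 * Build an RSS 2.0 document from channel details and a list of items.
 * Each item is an array with 'title', 'link', and 'pubDate' keys.
 */
function build_feed( array $channel, array $items ): string {
    $xml = new SimpleXMLElement( '<rss/>' );
    $xml->addAttribute( 'version', '2.0' );
    $node = $xml->addChild( 'channel' );
    foreach ( array( 'title', 'link', 'description' ) as $field ) {
        $node->addChild( $field, htmlspecialchars( $channel[ $field ], ENT_XML1 | ENT_QUOTES ) );
    }
    foreach ( $items as $item ) {
        $entry = $node->addChild( 'item' );
        foreach ( array( 'title', 'link', 'pubDate' ) as $field ) {
            $entry->addChild( $field, htmlspecialchars( $item[ $field ], ENT_XML1 | ENT_QUOTES ) );
        }
    }
    return (string) $xml->asXML();
}

/**
 * Build one feed per configured account. $fetch_items is any callable that
 * takes a username and a URL pattern and returns a list of item arrays.
 * Returns an array of output filename => feed XML.
 */
function generate_feeds( array $feeds, callable $fetch_items ): array {
    $documents = array();
    foreach ( $feeds as $username => $feed ) {
        $items = $fetch_items( $username, $feed['url_pattern'] );
        $documents[ $feed['output'] ] = build_feed( $feed['channel'], $items );
    }
    return $documents;
}

// One entry per Twitter account; add more entries for more feeds
$feeds = array(
    'wearrrichmond' => array(
        'channel'     => array(
            'title'       => 'Richmond Community Schools',
            'link'        => 'http://www.rcs.k12.in.us/',
            'description' => 'Richmond Community Schools',
        ),
        'url_pattern' => 'rcs.k12.in.us/files',
        'output'      => '/path/to/www/rcs-twitter.rss',
    ),
);
```

Passing the fetching step in as a callback keeps the feed-building code free of network calls, which also makes it easy to try out with canned data.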

If you find this helpful or have a variation on this concept that you use, let me know in the comments!

Updated July 14, 2020 to add “tweet_mode = extended” to the API request and to replace references to the tweet text with “full_text”, since the Twitter API apparently does not return the full 280-character version of tweets by default.
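
If the same code ever needs to cope with responses requested both with and without the extended mode, a tiny accessor could prefer “full_text” and fall back to the older “text” field. The helper name is hypothetical, and the sketch assumes PHP 7.2 or later.

```php
<?php
/**
 * Return the text of a tweet object, preferring the extended "full_text"
 * field and falling back to "text" when it is absent.
 */
function tweet_text( object $tweet ): string {
    return $tweet->full_text ?? $tweet->text ?? '';
}
```

The script would then call `tweet_text( $tweet )` wherever it currently reads `$tweet->full_text`.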