So you’ve decided to publish timely content on the Internet but your website does not have an RSS feed.

Or even worse, you have played some role in designing or building a tool or service that other people use to publish timely content on the Internet, and you unforgivably allowed it to ship without support for RSS feeds.

And you probably just assume that a few remaining RSS feed nerds out there are just quietly backing away in defeat. “They’ll just have to manually visit the site every day if they want updates,” you rationalize. “They can download our app and get push notifications instead,” you somehow say with a straight face.

No.

I don’t know who you are. I don’t know what you want. If you are looking for clicks, I can tell you I don’t have any clicks left to give.

But what I do have are a very particular set of skills, skills I have acquired over a very long career. Skills that make me a nightmare for people like you.

If you publish your content in an RSS feed now, that’ll be the end of it. I will not look for you, I will not pursue you, I will simply subscribe to your feed.

But if you don’t, I will look for you, I will find you, and I will make an RSS feed of your website.

And here’s how I’ll do it.

(Psst, this last bit is a playful reference to the movie Taken, I am a nice person IRL and don’t actually tend to threaten or intimidate people around their technology choices. But my impersonation of a militant RSS advocate continues herein…)

Jump straight to a scenario and solution:

- You’re just hiding your RSS feed

- You’re hiding your RSS feed URL structure in documentation or forum responses

- You forgot that feeds are special URLs

- Your feed is malformed

- You have no feed but your content is structured

- You use asynchronous requests to fetch JSON and render your content

- You block requests from hosted servers

- You’re super in to email newsletters

- Your email newsletter has no RSS feed

- Your mobile app is where you put all the good stuff

You’re just hiding your RSS feed

Okay, heh, simple misunderstanding, you actually DO have an RSS feed but you do not mention or link to it anywhere obvious on your home page or content index. You know what, you’re using a <link rel="alternate" type="application/rss+xml" href="..." /> tag, so good for you.

I found it, I’m subscribing, we’re moving on, but for crying out loud please also throw a link in the footer or something to help the next person out.

Further reading and resources

You’re hiding your RSS feed URL structure in documentation or forum responses

Oh so you don’t have an advertised RSS feed or <link> tag but you do still support RSS feeds after all, it’s just that you tucked that fact away in some kind of obscure knowledgebase article or a customer support rep’s response in a community forum?

Fine. I would like you to feel a bit bad about this and I briefly considered writing a strongly worded email to that end, but instead I’m using the documented syntax to grab the link, subscribe and move on. Just…do better.

Further reading and resources

You forgot that feeds are special URLs

You decided to put your RSS feed behind a Cloudflare access check. Or you aggressively cached it. Or your web application firewall is blocking repeated accesses to the URL from strange network locations and user agents, which is exactly the profile of feed readers.

I understand, we can’t always remember to give every URL special attention.

But I will email your site administrator or tech support team about it, and you will open a low-priority ticket or send my request to your junk folder, and I will keep following up until you treat your RSS feed URLs with the respect they deserve.

Further reading and resources

Your feed is malformed

You somehow ended up with some terrible, custom, non-standard implementation of RSS feed publishing and you forgot to handle character encoding or media formats or timestamps or weird HTML tags or some other thing that every other established XML parsing and publishing library has long since dealt with and now you have an RSS feed that does not validate.

Guess what, RSS feeds that don’t validate might as well be links to a Rick Astley video on YouTube given how picky most RSS feed readers are. It’s just not going to work.

But I will work with that. I will write a cron job that downloads your feed every hour and republishes it with fixes needed to make it valid.

#!/bin/sh

# Define variables for URLs, file paths, and the fix to apply

RSS_FEED_URL="https://example.com/rss.xml"

TEMP_FILE="/tmp/rss_feed.broken"

OUTPUT_FILE="/path/to/feeds/rss_feed.xml"

SEARCH_STRING="Parks & Recreation"

REPLACEMENT_STRING="Parks & Recreation"

# Download the RSS feed

curl -s -L -o "$TEMP_FILE" "$RSS_FEED_URL"

# Fix invalid characters or strings in the RSS feed

perl -pi -e "s/$SEARCH_STRING/$REPLACEMENT_STRING/gi;" "$TEMP_FILE"

# Move the fixed file to the desired output location

cp "$TEMP_FILE" "$OUTPUT_FILE"

# Clean up the temporary file

rm "$TEMP_FILE"

If I want to embarrass you in front of your friends I will announce the new, valid feed URL publicly and let others use it too. But really, you should just fix your feed and use a standard library.

Further reading and resources

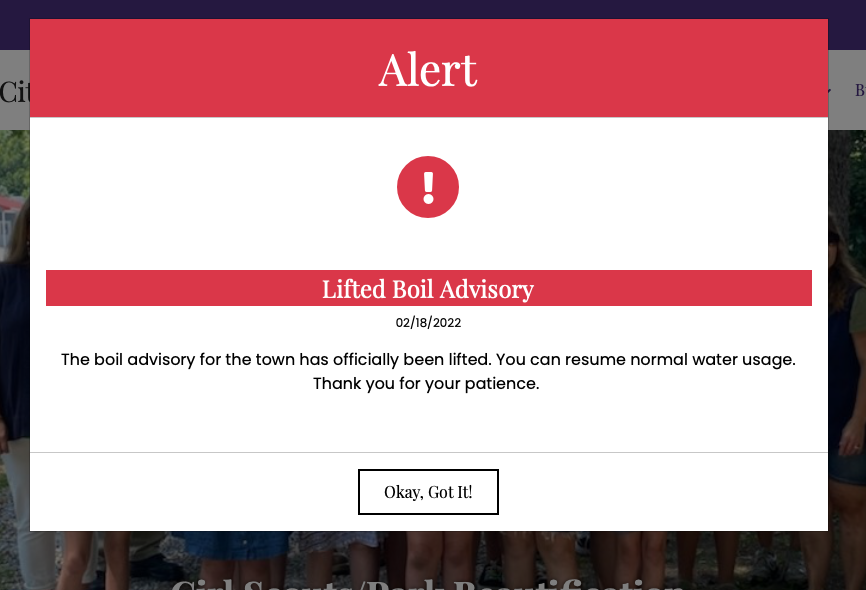

You have no feed but your content is structured

You programmatically publish your timely content in a structured format from a structured source but offer no RSS feed? What a terrible decision you’ve made for the distribution of your information, for your readers, for your stakeholders, and for the whole world.

But ’tis no matter, I’ll have an RSS feed of your site up and running shortly using a DOM crawler (e.g. Symfony’s DomCralwer component in PHP) that can navigate HTML and XML documents using XPath, HTML tags or CSS selectors to get me what I want.

Your stuff’s generated using an HTML/CSS template with a loop? Great? Or it’s even just in a plain HTML table? Let’s do this.

$nodes = $crawler->filter('.post_content table tr');

foreach ($nodes as $node) {

$tr = new Crawler($node);

$date = $tr->filter('td')->eq(0)->text();

$title_column = $tr->filter('td')->eq(1);

$title = $title_column->text();

$links = $title_column->filter('a');

if ($links->count()) {

$url = $this->resolveUrl($source, $links->first()->attr('href'));

} else {

continue;

}

$items[] = array(

'pubDate' => Carbon::createFromFormat('!m-d-Y', $date),

'title' => ucwords(strtolower($title)),

'url' => $url,

);

}

My software will programmatically visit your site every hour to see what it finds and publish an RSS feed of the latest.

Further reading and resources

You use asynchronous requests to fetch JSON and render your content

You were right there at the finish line, buddy, and you choked.

You had everything you need — structured data storage, knowledge of how to convey data from one place to another in a structured format, probably some API endpoints that already serve up exactly what you need — all so you can dynamically render your webpage with a fancy pagination system or a super fast search/filter feature.

And yet, still, you somehow left out publishing your content in one of the most commonly used structured data formats on the web. (Psst, that’s RSS that I’m talking about. Pay attention, this whole thing is an RSS thing!)

So I will come for your asynchronous requests. I will use my browser’s dev tools to inspect the network requests made when you render your search results, and I will pick apart the GET or POST query issued and the headers and data that are passed with it, and I will strip out the unnecessary stuff so that we have the simplest query possible.

If it’s GraphQL, I will still wade through that nonsense. If it uses a referring URL check as some kind of weak authentication, I will emulate that. If you set an obscurely named cookie on first page visit that is required to get the content on page visit two, be assured that I will find and eat and regurgitate that cookie.

And then I will set up a regularly running script to ingest the JSON response and create a corresponding RSS feed that you could have been providing all along. I can do this all day long.

public function generateRssItems(Source $source): RssItemCollection

{

$response = HTTP::withUserAgent($userAgent)

->get($source->source_url);

$this->searchResultsToArray($response->json(), $source);

return RssItemCollection::make($this->feedItems);

}

private function searchResultsToArray(array $results, Source $source): void

{

if (! empty($results['combined_feeds'])) {

foreach ($results['combined_feeds'] as $result) {

$this->feedItems[] = array(

'pubDate' => $this->getPubDate($result),

'title' => $this->getTitle($result),

'url' => $this->getArticleUrl($result, $source),

'description' => $this->getArticleContent($result),

);

}

return;

}

}

Further reading and resources

You block requests from hosted servers

Even though I build my content fetching tools to be good citizens on the web — at most checking once per hour, exponential backoff if there are problems, etc. — you may still decide to block random web requests for random reasons. Maybe it’s the number of queries to a certain URL, maybe it’s the user agent, or maybe it’s because they’re coming from the IP address of a VPS in a data center that you assume is attacking you.

Fine. Whatever. I used to spend time trying to work around individual fingerprinting and blocking mechanisms, but I got tired of that. So now I pay about $25/year for access to rotating residential IP addresses to serve as proxies for my requests.

I don’t like supporting these providers but when I come to make an RSS feed of your content, it will look like it’s from random Jane Doe’s home computer in some Virginia suburb instead of a VPS in a data center.

Look what you made me do.

Further reading and resources

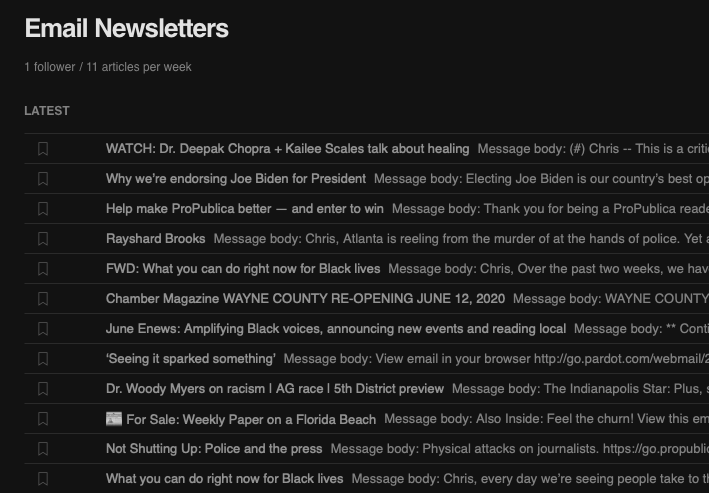

You’re super in to email newsletters

You don’t put timely new content on your website, but you sure do love your fancy email newsletters with the timely updates your audience is looking for. You like them so much that you favor them over every other possible content distribution channel, including a website with an RSS feed.

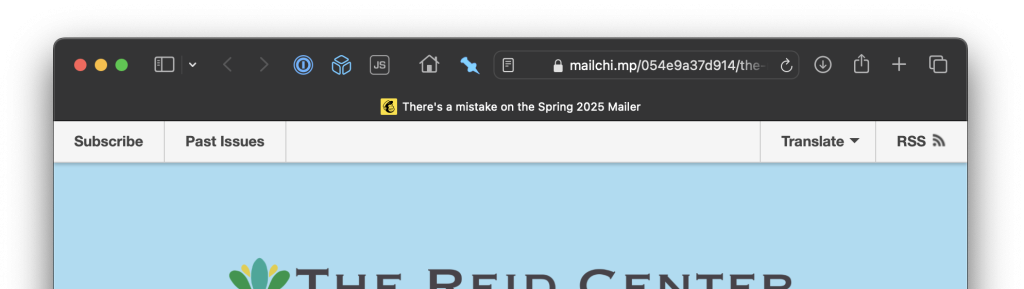

That’s okay. Fortunately, many email newsletter platforms have figured out that offering a web-based archive of past newsletter campaigns is a valuable service they can provide to, um, people like you, and many of those have figured out that including an RSS feed of said campaigns is worthwhile too.

Mailchimp puts a link to right there in the header of their web-based view of an individual campaign send unless (boo) a list owner has turned off public archives, which you should seriously NOT do unless your list really needs to be private/subscribers only.

Further reading and resources

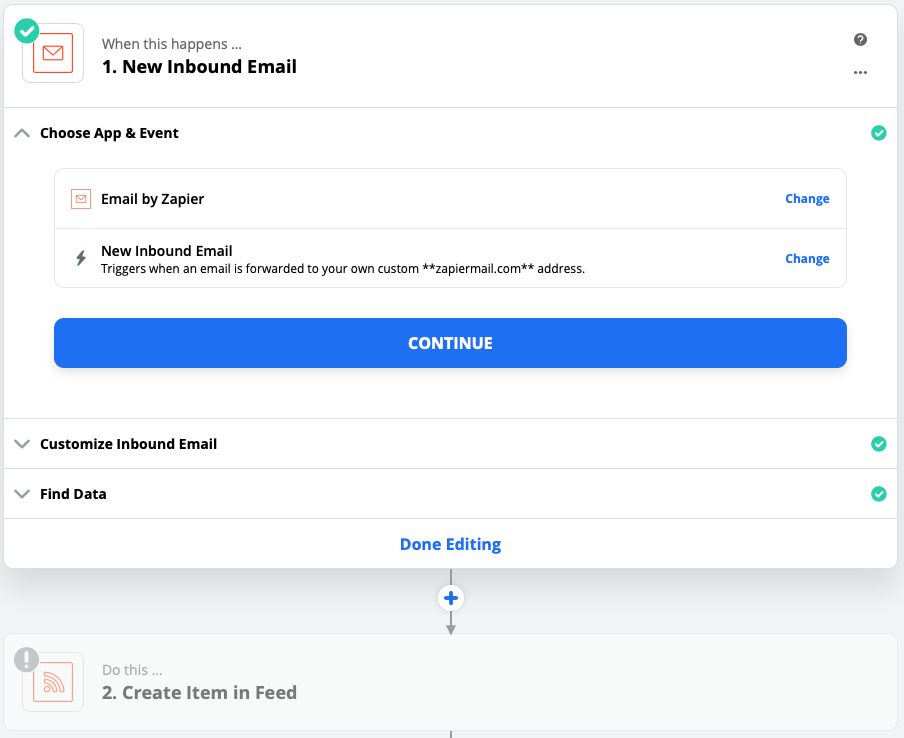

Your email newsletter has no RSS feed

You’re using an email newsletter platform that doesn’t have RSS feeds built in?

Please, allow me to make one for you. I will subscribe to your email newsletter with a special address that routes messages to a WordPress site I set up just for the purpose of creating public posts with your newsletter content. Once that’s done, the RSS part is easy given that WordPress has that support built right in.

I’ve detailed the technical pieces of that particular puzzle in a separate post:

Your mobile app is where you put all the good stuff

No RSS feed. No email newsletter. The timely content updates are locked up in your mobile app where the developers involved have absolutely no incentive to provide alternative channels you didn’t ask for.

No hope? This is probably where a reasonable person would say “nah, I’m out.” Me? I’m still here.

The nice thing is that if a mobile app is reasonably well designed and supports any kind of information feed, push notifications, etc. then it almost has to have some kind of API driving that. Sometimes the APIs are gloriously rich in the information they make available, but of course usually also completely undocumented and private.

I’ve found Proxyman to be an indispensable tool for what I need to do next:

- Intercept all web traffic from my mobile device and route it through my laptop

- Install a new certificate authority SSL certificate on my mobile device that tells it to trust my laptop as a source of truth when verifying the validity of SSL certificates for certain domains.

- Start making requests from your mobile app that loads the information I want to be in an RSS feed.

- Review the HTTP traffic being proxied through my laptop and look for a request that has a good chance of being what I want.

- Tell my laptop to intercept not only the traffic meta data but the secure SSL-encrypted traffic to the URL I care about.

- Inspect the request and response payload of the HTTPS request and learn what I need to know about how the API powering that mobile app feed operates.

It’s an amazing and beautiful process, and if it’s wrong I don’t want to be right.

If too many mobile app developers get wind of this kind of thing happening, they’ll start using SSL certificate pinning more, which builds in to the app the list of certificates/certificate authorities that can be trusted, removing the ability to intercept by installing a new one. So, you know, shhh. (Joking aside, secure all the things, use certificate pinning…but then also publish an RSS feed!)

Once I have your mobile app’s API information, it’s back to the mode of parsing a JSON response noted above, and generating my own RSS feed from it.

Further reading and resources

Escalations

There are other escalations possible, of course. Taking unstructured data and feeding it to an LLM to generate structured data. Recording and replaying all the keystrokes, mouse moves and clicks of a web browsing session. Convincing an unsuspecting IT department worker to build a special export process without really mentioning it to anyone. Scraping data from social media platforms.

I’ve done it all, none of it is fun, and none of it should be necessary.

Publish your info in a structured, standard way.

What do I do with all of these feeds?

Thanks for asking. I own a newspaper. We publish in print and online. Our job is to be aware of what’s happening in the community so we can distill what’s most important and useful in to reporting that benefits our readers. We have a very small staff tasked with keeping up with a lot of information. Aggregating at least some of it through RSS feeds has made a huge difference in our work.

Oh, you meant at the technical level? I have one Laravel app that is just a bunch of custom classes doing the stuff above and outputting standard RSS feeds. I have another Laravel app that aggregates all of the sources of community information I can find, including my custom feeds, in to a useful, free website and a corresponding useful, free daily email summary. I also read a lot of it in my own feed reader as a part of my personal news and information consumption habits.

Related writings

I’ve written a lot of stuff elsewhere about how to unlock information from one online source or another, and the general concepts of working on a more open, interoperable, standards-based web. If you enjoyed the above, you might like these:

- In rebuilding the open web, it’s up to technologists to make sure it’s also the best version of the web

- A proposed rubric for scoring sites on their commitment to the open web

- The importance of owning instead of renting our digital homes

- An example of why RSS is useful and important

- Personal banking needs an API

- Unlocking community event information from Facebook

Updated March 3, 2025 at 10 a.m. Eastern to note: Hacker News discussion